Automated conversational product

This product allows clients to have an automated text conversation with their subscribers to positively influence metrics.

-

Context

Attentive is a text messaging marketing solution primarily for brands to reach their subscribers to drive sales.

-

Problem / opportunity

Leadership and our clients believed there was an opportunity to increase sales through conversational text messaging (engaging through back-and-forth texting with subscribers). However, we didn’t have a product that let our clients test varying use cases and see what might drive higher sales.

-

Business goal

To increase client revenue (and in turn help decrease client churn). Leadership wanted our team to prove conversational text messaging could lead to higher revenue than standard one-way text messaging.

-

Product goals

Drive adoption: 20% of clients will have at least one active automated conversation within 2 months

Test use cases: test the 8 conversational use cases brainstormed by the team with at least 5 clients each within the first 3 months. (Although we didn’t have control over which metrics these tests would move, this would get us closer to understanding if any use case might contribute to higher revenue.)

We discovered an opportunity…

The newly formed Conversational team (myself, my PM, and a handful of engineers) was tasked with showing how a conversational product could drive higher revenue for our clients. This was one of the company’s ‘biggest bets’, and we were determined to maximize our testing of conversational use cases to find metric-moving patterns.

First, I uncovered and synthesized research that had been started months earlier. A survey had been sent to around 400 clients to gauge interest. A few takeaways:

There was lots of interest in conversational text messaging; however, in interviews it seemed most lacked confidence in how to start a conversation to move metrics.

Around 40% (the largest share) deemed auto-response functionality the most important feature; in interviews we heard most clients don’t have the bandwidth to support a human texting back to their subscribers.

Clients were most interested in conversational use cases that included driving the subscriber to a purchase.

We also completed a competitive gap analysis to understand where we stood in comparison to other companies. A few takeaways:

All core competitors had added a self-service conversational product within the last year. However, most of these competitors offered a very limited product, with only 2 of 6 offering a comprehensive automated conversational product.

Conversational competitors used human agents to act as a concierge for clients’ subscribers instead of offering a self-service automated product.

We defined our problem...

Our clients wanted to more deeply engage with their subscribers through text conversations. However, they didn’t have a way to engage in text conversations with subscribers and furthermore couldn’t test what type of engagement would lead to more sales.

We hypothesized an automated, conversational product would open up opportunities for our clients to understand their subscribers and drive more sales.

Through a quick confidence, impact, and risk exercise, the team decided to start with an automated solution. We felt we could maximize the number of use cases we could test with less effort. This option carried a risk: we assumed human agents might drive higher revenue, since our automated solution wasn’t robust enough to detect subscriber intent. However, we could still invest in human-powered tests alongside our automated tests the following quarter.

From here we explored our ‘How might we’s’:

How might we give customers the ability to conversationally text with their subscribers to help drive purchases…

…and how might we do this using little to no client resources.

We ideated...

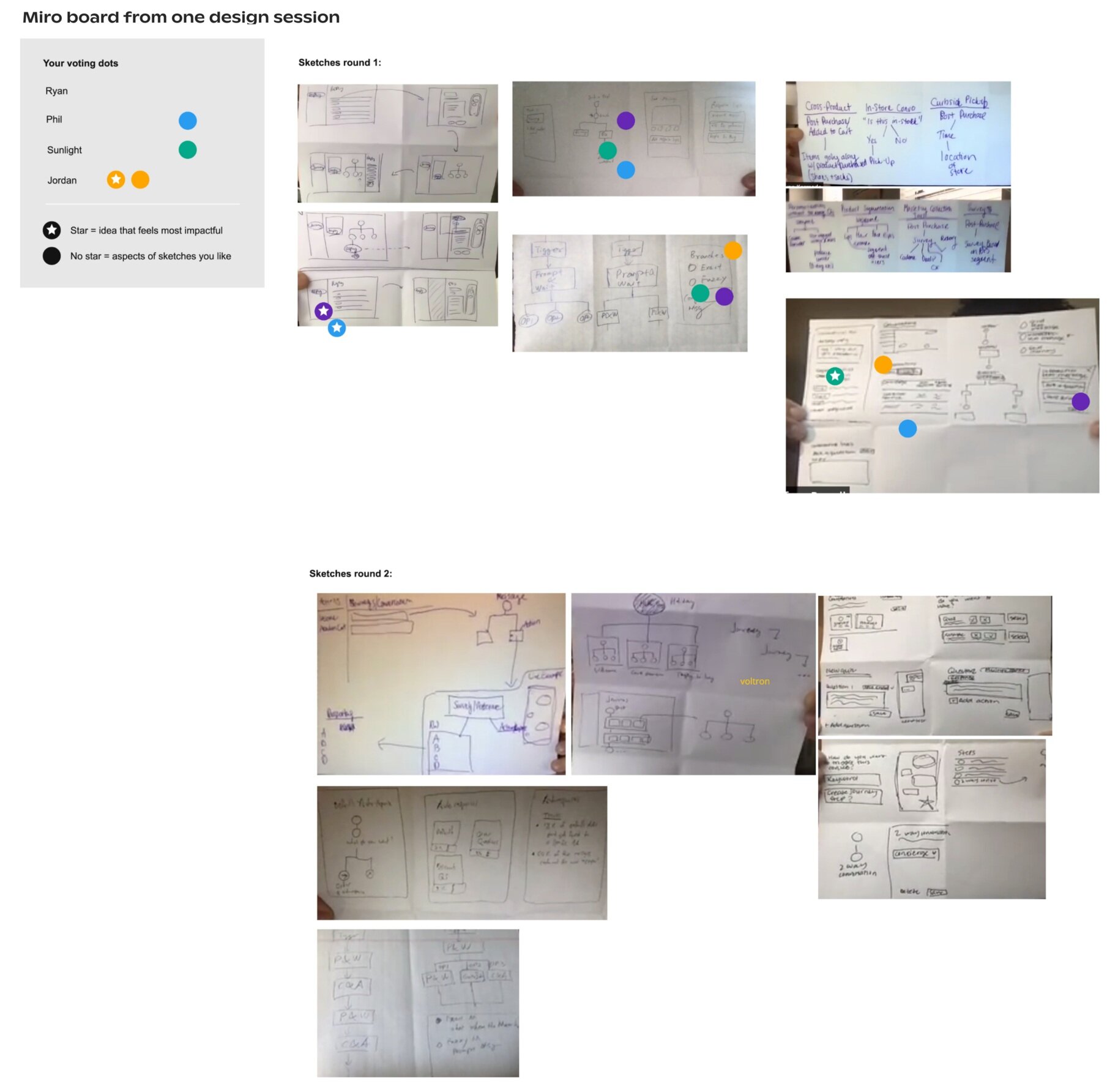

I led 2 design workshops with PMs, Customer Success Managers, Engineers, Product Marketing Managers, and other Designers to ideate solutions around our ‘how might we’s’. Under the right conditions, a variety of perspectives can contribute far more to a solution than one person ideating alone. The workshops were a great way to gain initial alignment and understanding in a very ambiguous space.

We designed and tested...

After aligning with product and engineering on a basic approach, I created lo-fis based on all the inputs we’d gathered thus far.

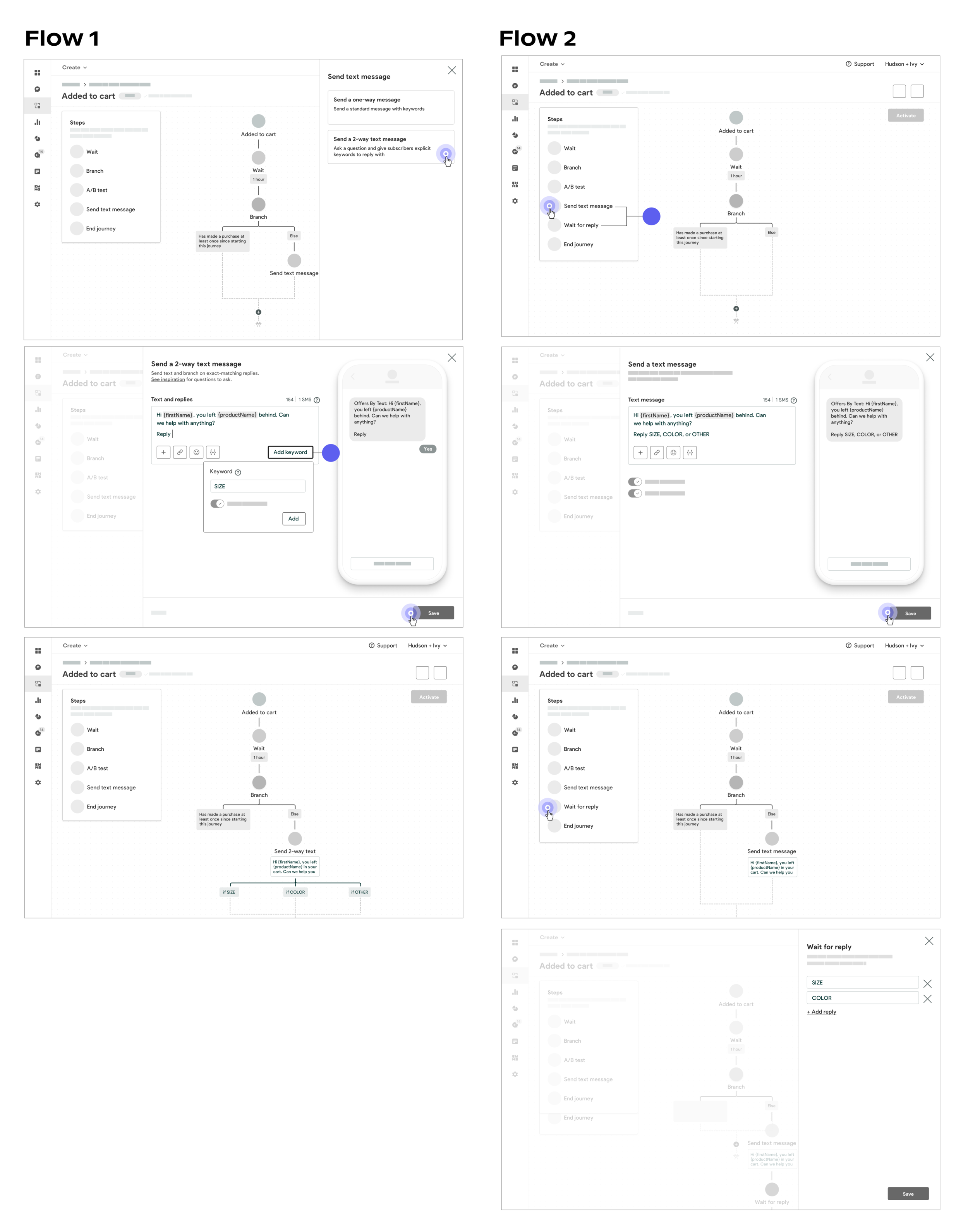

Quick sketching sessions and inspiration from a competitor analysis led to the 2 flow options below. Both flows had UX and engineering pros and cons to consider. We would run these past clients to better understand their mental model and hopefully tip the scale in favor of one approach. Generally, while flow 2 might allow for more flexibility in the future, flow 1 may better prevent client errors and instill best practices.

In flow 2, a client would need to add 2 steps to complete the automated conversation, compared to flow 1, in which the client could ask a question and add reply keywords within one step.

In addition, in flow 2 we were worried a client might forget or misspell one of the reply keywords in the ‘Wait for Reply’ step without a reference to the previous step.

Next, I created a plan to interview 5 clients from different tiers. Primary research goals:

Understand the mental model clients use when thinking about starting a conversation via the platform

Understand how much handholding clients need

Understand how much conviction or thought clients naturally put into their end subscriber experience (i.e., do they have conviction about what their subscriber should receive if they respond with something other than one of the presented options?)

Identify signals of any other functionality necessary to drive adoption for the MVP

Generally, I approached the 30-45 minute interviews with:

A few open-ended questions about how they might utilize conversational texting and why

A nondescript screen of the platform, with a prompt asking how they would approach a use case they cared about

A walkthrough of one of the flows, with the client talking out loud about what they were thinking; I then repeated this with the other flow and asked for likes, dislikes, and why

General wrap-up questions and anything else a client wanted to add

After the 5 interviews, we pulled out patterns and I presented the takeaways to my team. Below is part of the takeaways report.

To gain alignment with both product and engineering on the MVP, I led an affinity mapping session. We came out with clear ‘musts’ for the product that would inform the hi-fi prototype.

I created hi-fis and tested...

We homed in on hi-fi designs and a clickable prototype based on Flow 1. Since clients didn’t have an obvious mental model of how to carry out an automated conversation, the handholding, error-proof approach of Flow 1 resonated more.

A few hi-fis below + decisions made:

1. A low-cost design and engineering solution to give clients more inspiration for how to start a conversation

2. Defaults to start clients off on the right foot, based on the lack of conviction we saw in lo-concept testing

3. A very flexible mad-lib approach to provide better defaults for clients. This approach would allow us to acquire and parse through a large amount of data from all our clients to continually suggest higher-performing replies based on client industry and goals.

4. Reply format options to handhold clients. We realized a good percentage of clients were setting up their replies in a way that was less intuitive for their subscribers. Handholding them through this would ensure they were making the most of their reply rate with formats our data showed to be high performing. We provided examples for each format type since a few lo-concept participants were confused about the 2 options.

5. A reference to the text message a subscriber would see. We believed this would not only give clients peace of mind, but would also put them in their subscribers’ shoes as they were creating the conversation.

6. Once the step is saved, the focus stays on the conversational step so clients can pick up where they left off. The replies branch out from the conversational step, which aligns with client expectations from lo-concept testing.

As a team we decided to move forward with hi-concept testing while backend engineers started pulling together requirements. I created an interview plan for unmoderated hi-concept testing through usertesting.com with 3 user testing panelists, 3 clients, and 3 Customer Success Managers. Goals of this testing included:

Confirming that the experience matched users’ expectations

Confirming that the copy was clear and gave users enough information to take action

(If time) Signals on future-forward ideas

I synthesized takeaways from the interviews and created a highlight reel to present to my team. A few high-level takeaways are in the report below.

We landed on an intuitive and scalable experience...

With our learnings from hi-concept testing, I made tweaks to the prototype that included clearing up confusing copy, adding a link for tips, and introducing a few templates within our already-created templates section.

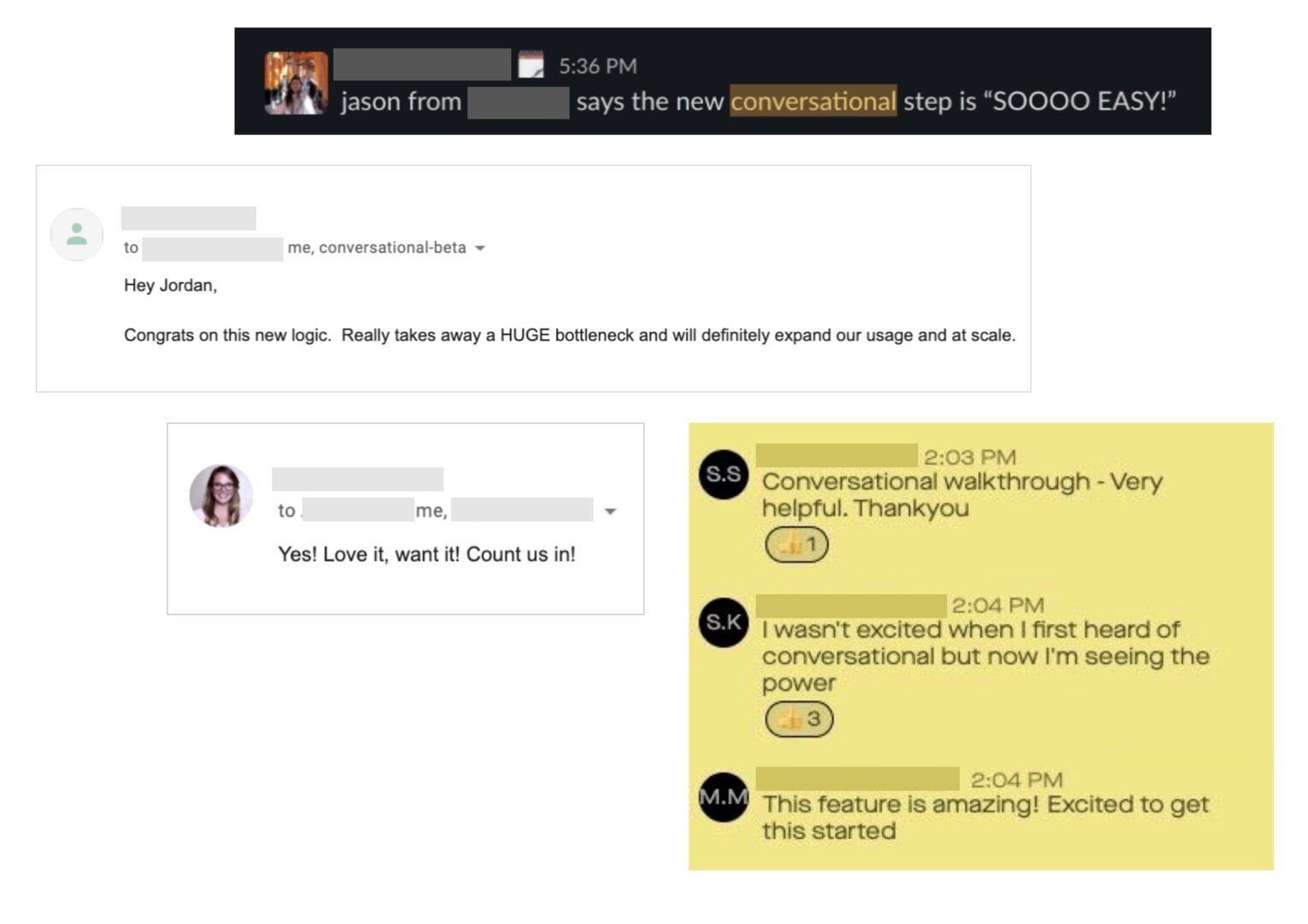

As a team, we felt there were no red flags and we were ready for beta. Although I left before the product launched, we started to see a lot of early excitement and positive sentiment (screenshot below). However, early anecdotal feedback is not a great gauge of success.

If I had stayed with the company longer, I would have looked forward to:

Tracking client adoption and following up with clients and Customer Success Managers if we weren’t hitting our goal.

Helping organize a team to test our conversational use cases. Ideally we could get more than 10 clients per test, since there would inevitably be variables between each client’s conversations and the subscribers they’re engaging with. After 2 months, the team could step back, see whether any patterns had emerged in the metrics moved by each use case, and continue testing.